[A] Today’s Build

We’re building a production-grade prompt engineering system that turns raw LLM output into reliable, structured data. It has four parts:

Smart Prompt Constructor - Dynamic prompt templating with validation

JSON Parser Pipeline - Multi-strategy parsing with fallback recovery

Error Recovery Dashboard - Real-time monitoring of parse failures and auto-corrections

Structured Response Validator - Schema enforcement with confidence scoring
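To make "dynamic prompt templating with validation" concrete, here is a minimal sketch using only Python's standard library. The names `build_prompt`, `required_fields`, and `PromptTemplateError` are hypothetical and chosen for illustration; the lesson's actual Smart Prompt Constructor may look different. `string.Template` is used because its `$name` placeholders do not collide with the literal braces in JSON examples embedded in a prompt.

```python
from string import Template


class PromptTemplateError(ValueError):
    """Raised when the supplied variables do not match the template's placeholders."""


def required_fields(template: Template) -> set:
    # Collect every $name / ${name} placeholder declared in the template text.
    names = set()
    for match in template.pattern.finditer(template.template):
        name = match.group("named") or match.group("braced")
        if name:
            names.add(name)
    return names


def build_prompt(template_text: str, **variables: str) -> str:
    # Validate before rendering, so a bad prompt never reaches the LLM call.
    template = Template(template_text)
    expected = required_fields(template)
    supplied = set(variables)
    missing = expected - supplied
    extra = supplied - expected
    if missing or extra:
        raise PromptTemplateError(
            f"missing={sorted(missing)} extra={sorted(extra)}"
        )
    return template.substitute(variables)


prompt = build_prompt(
    'Describe $topic. Reply with JSON only, e.g. {"title": "...", "summary": "..."}',
    topic="rate limiting",
)
```

Rejecting both missing and unexpected variables is a design choice in this sketch: a misspelled variable name fails loudly at construction time instead of silently producing a prompt with a gap.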

Building on L6: We extend the secure API client from L6 with templated prompt construction and multi-strategy JSON extraction, so that raw LLM responses become validated, structured data.

Enabling L8: This lesson provides the foundation for agent decision-making by ensuring that what reaches an agent is structured, parseable output it can reason over.

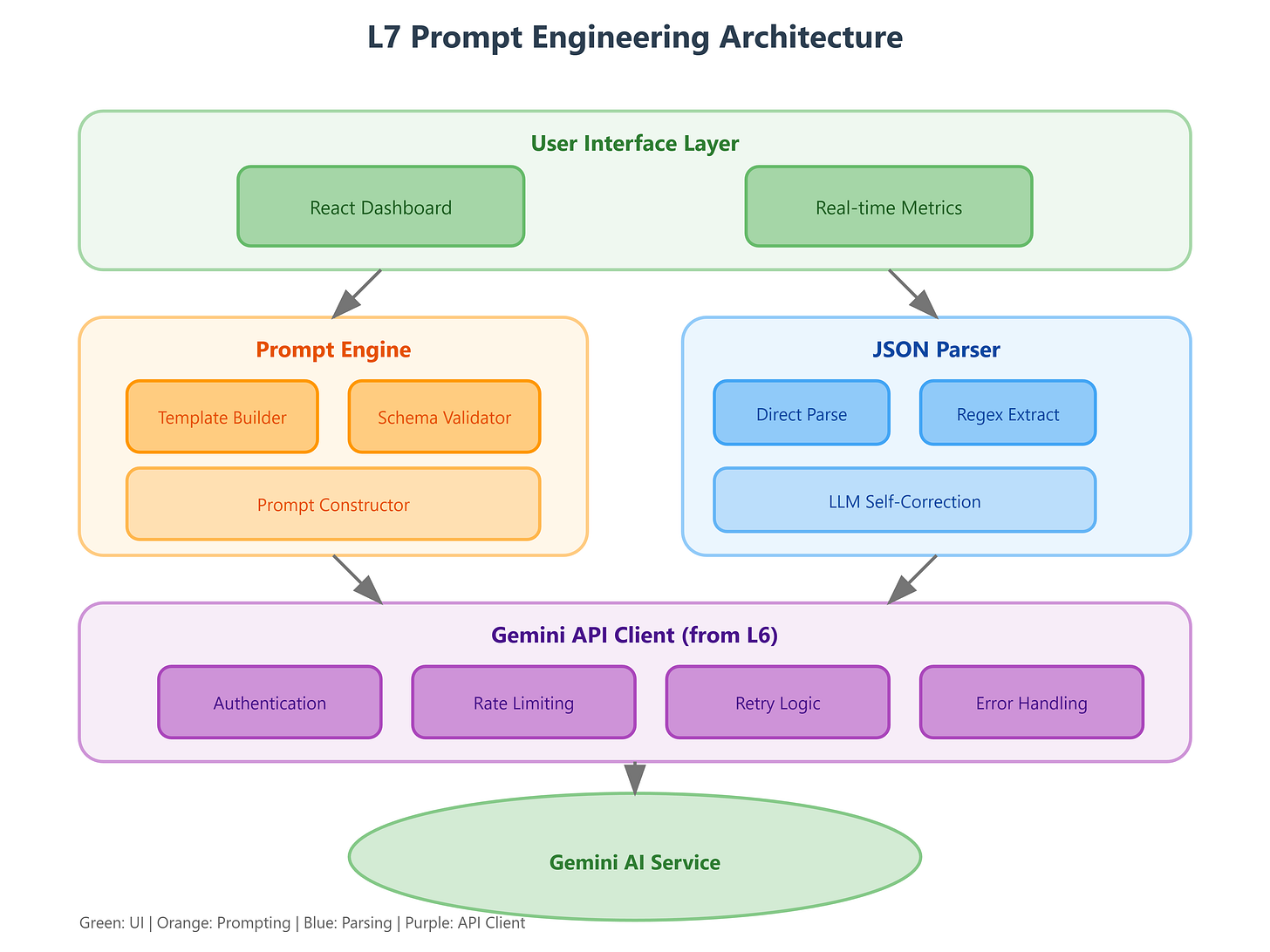

[B] Architecture Context

In the 90-lesson VAIA curriculum, L7 marks the transition from basic LLM interaction (L6) to autonomous agent behavior (L8). Module 1 focuses on LLM foundations, and L7 specifically bridges the gap between raw text generation and structured agent reasoning.

Integration with L6: We reuse the authenticated API client, rate limiting, and retry logic from L6, and add a parsing layer that converts text responses into actionable data structures.

Module Objectives Alignment: By the end of Module 1, students will have a complete LLM interaction pipeline. L7 completes the “output transformation” piece, ensuring agents receive structured data rather than unpredictable text.

Component Architecture

Our system consists of four primary components:

PromptEngine - Manages templates, injects variables, validates structure

GeminiClient - Extended from L6 with schema-aware requests

JSONParser - Multi-strategy extraction with confidence scoring

ValidationPipeline - Pydantic schemas + business rule checks
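The "multi-strategy extraction with confidence scoring" idea behind JSONParser can be sketched as an ordered list of extractors, each tried in turn until one yields valid JSON. This is an illustrative sketch, not the lesson's implementation: `parse_llm_json`, `ParseResult`, the three strategies, and the confidence values (1.0, 0.9, 0.6) are all assumptions made for the example.

```python
import json
import re
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ParseResult:
    data: Optional[Any]   # parsed JSON value, or None if every strategy failed
    strategy: str         # name of the strategy that succeeded
    confidence: float     # illustrative score: cleaner extraction, higher score


FENCE = "`" * 3  # markdown code fence marker
FENCE_RE = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def _direct(text: str) -> Optional[str]:
    # Strategy 1: the whole response is already JSON.
    return text.strip()


def _fenced(text: str) -> Optional[str]:
    # Strategy 2: JSON wrapped in a markdown code fence.
    match = FENCE_RE.search(text)
    return match.group(1) if match else None


def _brace_span(text: str) -> Optional[str]:
    # Strategy 3: take everything from the first "{" to the last "}".
    start, end = text.find("{"), text.rfind("}")
    return text[start:end + 1] if 0 <= start < end else None


STRATEGIES = [
    ("direct", 1.0, _direct),
    ("fenced", 0.9, _fenced),
    ("brace_span", 0.6, _brace_span),
]


def parse_llm_json(text: str) -> ParseResult:
    for name, confidence, extract in STRATEGIES:
        candidate = extract(text)
        if candidate is None:
            continue
        try:
            return ParseResult(json.loads(candidate), name, confidence)
        except json.JSONDecodeError:
            continue  # fall back to the next, more permissive strategy
    return ParseResult(None, "failed", 0.0)


result = parse_llm_json('Sure! Here it is: {"intent": "refund"} Hope that helps.')
```

Recording which strategy succeeded gives downstream code a simple signal: a result recovered by the permissive brace-span fallback can be logged or reviewed, while a direct parse can be trusted more readily.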

Data flows from user requests → prompt construction → LLM API call → multi-tier parsing → validated output → agent consumption.
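The flow above can be sketched end to end with a stubbed model call. Everything here is hypothetical: `run_pipeline`, `construct_prompt`, `validate`, the `Ticket` shape, and `fake_llm` are invented for illustration, a plain `json.loads` stands in for the multi-tier parser, and a standard-library dataclass stands in for the Pydantic schema so the example runs without extra dependencies.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    category: str
    priority: int


def construct_prompt(request: str) -> str:
    # Prompt construction: state the expected output shape explicitly.
    return (
        "Classify the support request. Reply with JSON only, using keys "
        '"category" (string) and "priority" (integer 1-5).\n'
        f"Request: {request}"
    )


def validate(payload: object) -> Ticket:
    # Schema check: required keys with the right types.
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    category = payload.get("category")
    priority = payload.get("priority")
    if not isinstance(category, str):
        raise ValueError("category must be a string")
    if isinstance(priority, bool) or not isinstance(priority, int):
        raise ValueError("priority must be an integer")
    # Business rule check: priority must be in the allowed range.
    if not 1 <= priority <= 5:
        raise ValueError("priority must be between 1 and 5")
    return Ticket(category=category, priority=priority)


def run_pipeline(request: str, call_llm: Callable[[str], str]) -> Ticket:
    prompt = construct_prompt(request)   # user request -> prompt construction
    raw = call_llm(prompt)               # prompt -> LLM API call
    payload = json.loads(raw)            # raw text -> parsed data (single tier here)
    return validate(payload)             # parsed data -> validated output


def fake_llm(prompt: str) -> str:
    # Stub standing in for the real API client from L6.
    return '{"category": "billing", "priority": 2}'


ticket = run_pipeline("I was charged twice this month", fake_llm)
```

Passing the model call in as a function keeps the stages independently testable: the same `run_pipeline` works with a stub in tests and with the real client in production.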