[A] Today’s Build

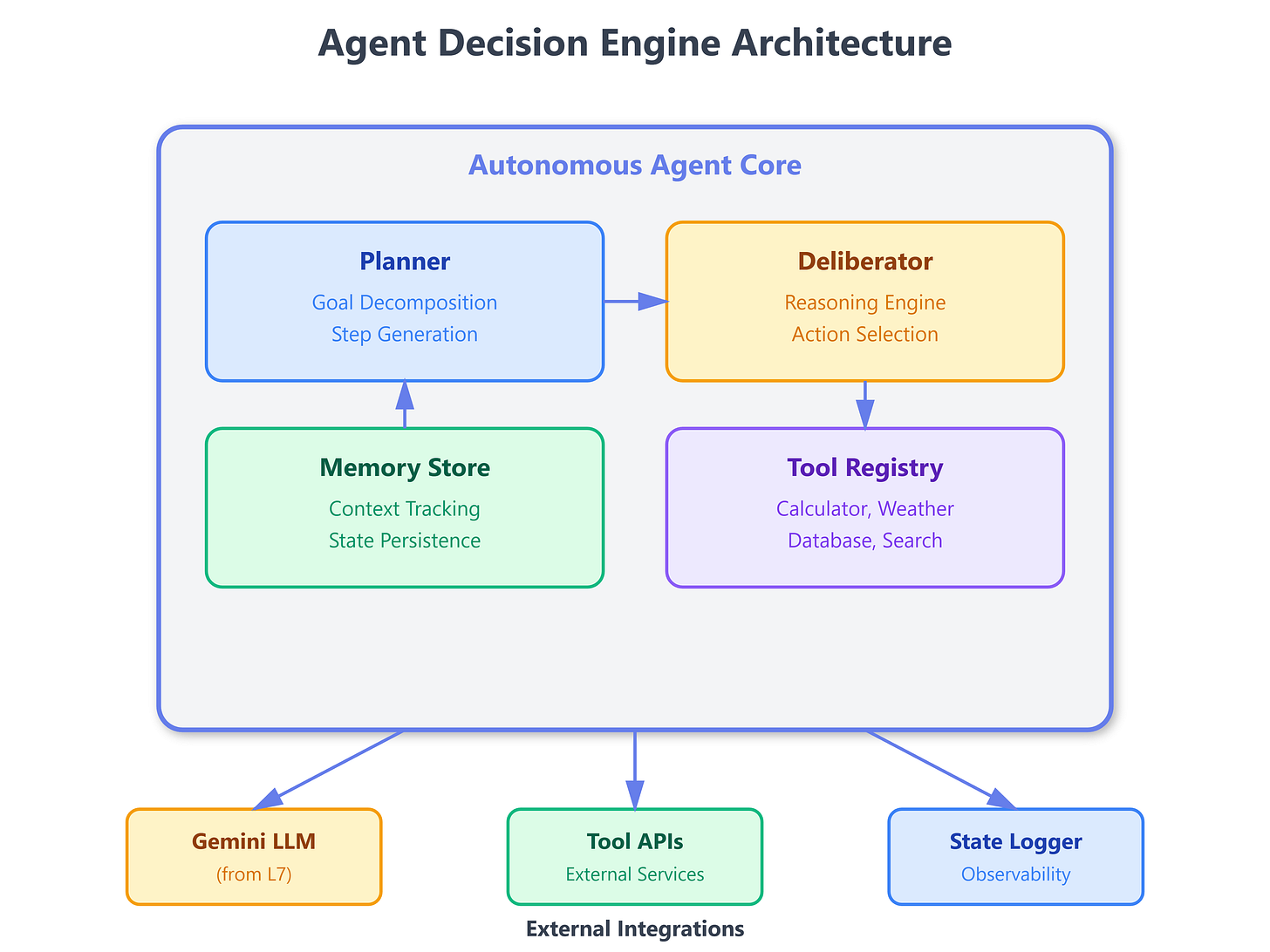

We’re building the conceptual and practical foundation for autonomous AI agents:

- Decision-making engine that evaluates context and selects actions autonomously
- Planning component that breaks complex goals into executable steps
- Tool registry system that connects agents to external capabilities
- Deliberation framework that traces reasoning paths for debugging and validation
- Integration layer connecting to L7’s JSON-structured LLM communication
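To make two of these components concrete, here is a minimal sketch of a tool registry and a deliberation trace. The class names (`ToolRegistry`, `DeliberationTrace`), their methods, and the two toy tools are hypothetical, chosen for illustration; they are not the lesson's actual implementation.

```python
from typing import Any, Callable, Dict, List


class ToolRegistry:
    """Maps tool names to plain Python callables (hypothetical design)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, func: Callable[..., Any]) -> None:
        # Refuse duplicates so one tool cannot silently replace another.
        if name in self._tools:
            raise ValueError(f"tool already registered: {name}")
        self._tools[name] = func

    def names(self) -> List[str]:
        # Sorted names can be listed in a prompt so the LLM knows its options.
        return sorted(self._tools)

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


class DeliberationTrace:
    """Append-only log of what the agent thought, did, and observed."""

    def __init__(self) -> None:
        self.steps: List[Dict[str, Any]] = []

    def record(self, thought: str, action: str, result: Any) -> None:
        self.steps.append({
            "step": len(self.steps) + 1,
            "thought": thought,
            "action": action,
            "result": result,
        })


# Toy tools, assumed purely for the example.
registry = ToolRegistry()
registry.register("add", lambda a, b: a + b)
registry.register("word_count", lambda text: len(text.split()))

trace = DeliberationTrace()
result = registry.call("add", a=2, b=3)
trace.record("Need a sum before answering", "add", result)
```

Keeping the trace separate from the registry means each reasoning step can be inspected after a run without re-executing any tool, which is the point of tracing for debugging and validation.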

This lesson transforms L7’s prompt-response pattern into an agent architecture. Where L7 gave us structured LLM output, L8 adds autonomy: the system now decides what to prompt, when to act, and which tools to invoke. This decision-making layer is what separates an agent from a script. Without it, you’re just calling APIs; with it, you’re deploying agents that operate independently within defined boundaries.

L9 will extend this with memory, letting agents remember past decisions and personalize responses. For now, we focus on the decision loop itself.

[B] Architecture Context

Position in 90-Lesson Path: Module 1 (Foundation), Lesson 8 of 12. We’ve covered Python setup (L1-L3), transformer architecture (L4), model comparison (L5), API patterns (L6), and JSON prompting (L7). Next: memory systems (L9), tool orchestration (L10), and error recovery (L11-L12).

Integration with L7: We reuse L7’s gemini_json_call() function that returns structured data. L8 wraps this in a deliberation loop: the agent decides what question to ask, parses the JSON response, and chooses the next action. The LLM becomes a reasoning engine, not just an API endpoint.
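The loop described above can be sketched as follows. This is a minimal illustration under stated assumptions: `fake_gemini_json_call` is a canned stand-in for L7’s `gemini_json_call()`, whose real signature is not shown here (we assume it takes a prompt string and returns a dict). The JSON keys `thought`, `action`, and `args`, the `run_agent` function, and the `max_steps` budget are likewise hypothetical.

```python
from typing import Any, Callable, Dict, List


def fake_gemini_json_call(prompt: str) -> Dict[str, Any]:
    """Canned stand-in for L7's gemini_json_call(); the signature is assumed."""
    if "Observation: 5" in prompt:
        return {"thought": "I have the sum", "action": "finish",
                "args": {"answer": "5"}}
    return {"thought": "I need to add the numbers", "action": "add",
            "args": {"a": 2, "b": 3}}


def run_agent(goal: str,
              llm_call: Callable[[str], Dict[str, Any]],
              tools: Dict[str, Callable[..., Any]],
              max_steps: int = 5) -> Dict[str, Any]:
    """Decide, act, observe, and repeat until 'finish' or the step budget ends."""
    trace: List[Dict[str, Any]] = []
    observation: Any = "none"
    for step in range(1, max_steps + 1):
        # 1. The agent decides what to ask: the prompt carries the current state.
        prompt = (
            f"Goal: {goal}\n"
            f"Tools: {sorted(tools)}\n"
            f"Observation: {observation}\n"
            'Reply as JSON with keys "thought", "action", "args".'
        )
        # 2. Parse the structured response.
        decision = llm_call(prompt)
        action = decision.get("action")
        args = decision.get("args", {})
        # 3. Choose the next action: finish, call a tool, or report an error.
        if action == "finish":
            trace.append({"step": step, "thought": decision.get("thought"),
                          "action": action, "observation": None})
            return {"answer": args.get("answer"), "trace": trace}
        if action in tools:
            observation = tools[action](**args)
        else:
            observation = f"error: unknown tool {action!r}"
        trace.append({"step": step, "thought": decision.get("thought"),
                      "action": action, "observation": observation})
    # Budget exhausted: stop instead of looping forever.
    return {"answer": None, "trace": trace}


TOOLS = {"add": lambda a, b: a + b}
outcome = run_agent("What is 2 + 3?", fake_gemini_json_call, TOOLS)
```

The step budget is one simple way to keep the agent within defined boundaries: if the model never returns `finish`, the loop ends with no answer and a full trace of what was attempted.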

Module Objectives: By L12, you’ll deploy a complete VAIA handling multi-step workflows. L8 provides the decision-making core that the remaining lessons build on: memory (L9), tool orchestration (L10), and error recovery (L11-L12).